New Research: Estimating Where Flickr Videos Were Shot

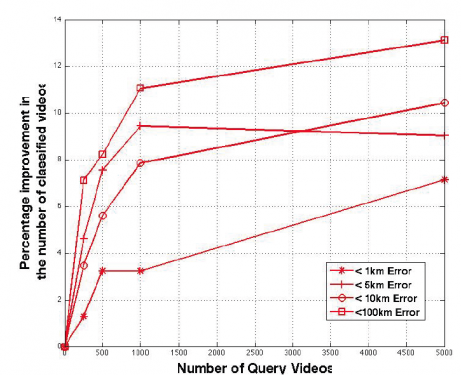

ICSI researchers and their collaborators have developed an algorithm that estimates where random Internet videos were shot by training itself both on videos whose locations have already been identified and on videos whose locations have not. It’s the first multimodal location estimation method to use both training and test data to improve its results and, in some cases, is over 10 percent more accurate than existing methods. The improvement is particularly high when few training videos are available.

The work was performed on videos selected at random from Flickr but could be applied to videos uploaded to other social media sites such as YouTube and Twitter. A system capable of accurately placing such media would allow users to quickly and easily group related videos in large collections, for example, and might also improve performance-based services.

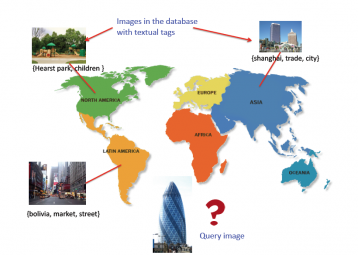

In location estimation of videos, data from videos whose locations are known is used to develop profiles of their respective locations. This data may include text data such as location tags, visual cues such as textures and colors, and sounds such as bird song. The attributes of a test video are then compared against these profiles and its location is estimated.
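
The profile-and-compare idea described above can be sketched for the text modality alone. This is a minimal illustration, not the method used in the research: the function names, the use of plain place names instead of coordinates, and the scoring rule (each tag votes for a location in proportion to how often training videos from that location carry it) are all assumptions made for the example.

```python
from collections import Counter, defaultdict


def build_profiles(training):
    """Build one tag-count profile per known location.

    `training` is an iterable of (tags, location) pairs (hypothetical format).
    """
    profiles = defaultdict(Counter)
    for tags, location in training:
        profiles[location].update(tags)
    return dict(profiles)


def score_locations(tags, profiles):
    """Score each location: every tag splits one vote among the locations
    whose training videos used it, so widely shared tags count for less."""
    scores = {}
    for location, profile in profiles.items():
        total = 0.0
        for tag in tags:
            seen_everywhere = sum(p[tag] for p in profiles.values())
            if seen_everywhere:
                total += profile[tag] / seen_everywhere
        scores[location] = total
    return scores


def estimate(tags, profiles):
    """Return the single best-matching location, or None when no tag matches
    or when the top two locations tie (the estimate is ambiguous)."""
    ranked = sorted(score_locations(tags, profiles).items(),
                    key=lambda item: item[1], reverse=True)
    if not ranked or ranked[0][1] == 0:
        return None
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]
```

With training videos tagged {berkeley, california} and {losangeles, california}, a test video tagged {berkeley, campus} is placed in Berkeley, while one tagged only {california} matches both profiles equally and gets no estimate. A real system would also fold in the visual and audio cues mentioned above.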

However, a new method developed by ICSI researchers and collaborators also compares test videos to each other. Say, for example, that a training set included a video with the tags {berkeley, california}. Existing methods would easily be able to determine where a test video with the tags {berkeley, sathergate, california} was shot, but would be unable to determine where a test video with the tags {sathergate, california} was shot, since it was not tagged {berkeley}. The new algorithm looks at similarities between the two test videos (in this case, the shared tags sathergate and california) and applies what it knows about the first to the second.
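
The Sather Gate example can be reproduced with a small self-training loop. This is a simplified stand-in rather than the algorithm from the paper, whose details this article does not give: it handles tags only, and the function names, the data format, the tie-means-unknown rule, and the second training video tagged {losangeles, california} are assumptions added so that the california tag alone is ambiguous.

```python
from collections import Counter, defaultdict


def best_location(tags, profiles):
    """Return the unique best-scoring location for `tags`, or None if no tag
    matches or the top two locations tie. Each tag splits one vote among the
    locations whose profiles contain it."""
    scores = {}
    for location, profile in profiles.items():
        score = 0.0
        for tag in tags:
            total = sum(p[tag] for p in profiles.values())
            if total:
                score += profile[tag] / total
        scores[location] = score
    ranked = sorted(scores.values(), reverse=True)
    if not ranked or ranked[0] == 0:
        return None
    if len(ranked) > 1 and ranked[0] == ranked[1]:
        return None
    return max(scores, key=scores.get)


def estimate_with_test_videos(training, test, max_rounds=10):
    """Estimate locations for `test` (video id -> tags), letting each placed
    test video enrich its location's profile for the videos still unplaced."""
    profiles = defaultdict(Counter)
    for tags, location in training:
        profiles[location].update(tags)

    estimates = {}
    for _ in range(max_rounds):
        progress = False
        for video_id, tags in test.items():
            if video_id in estimates:
                continue
            location = best_location(tags, profiles)
            if location is not None:
                estimates[video_id] = location
                # Treat the newly placed test video as extra training data.
                profiles[location].update(tags)
                progress = True
        if not progress:
            break
    return estimates


training = [({"berkeley", "california"}, "Berkeley"),
            ({"losangeles", "california"}, "Los Angeles")]
test = {"video_a": {"sathergate", "california"},
        "video_b": {"berkeley", "sathergate", "california"}}
result = estimate_with_test_videos(training, test)
```

In the first round only `video_b` can be placed, because `video_a` matches both locations equally through the california tag. Once `video_b` adds sathergate to the Berkeley profile, the second round places `video_a` in Berkeley as well. Processed on its own, `video_a` would get no estimate at all.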

After the system estimates the locations of the videos, it groups videos by region and analyzes their auditory and visual features to further refine its estimate.

This approach allows researchers to determine locations more accurately when little data is available, since test videos are essentially treated as training data. Training data can comprise only photos and videos with known locations, and that’s only about 5 percent of the media uploaded to the Internet, with even less data available for certain regions such as South Africa. By taking advantage of the huge amount of data whose location is unknown, the approach improved on existing methods by more than 10 percent in some cases.

The results are part of ICSI’s ongoing work in multimodal location estimation, the placing of photos and videos using a variety of indicators such as the text in tags, sounds in audio tracks, and visual cues. In one study, for example, ICSI researchers analyzed the sounds of ambulances from different cities around the world, allowing them to place videos that included an ambulance. Similar work has been done using visual cues such as texture and color.

Related Paper: “Multimodal Location Estimation of Consumer Media: Dealing with Sparse Training Data.” Jaeyoung Choi, Gerald Friedland, Venkatesan Ekambaram, and Kannan Ramchandran. Proceedings of the IEEE International Conference on Multimedia and Expo, Melbourne, Australia, July 2012. Available online at http://www.icsi.berkeley.edu/cgi-bin/pubs/publication.pl?ID=3274 .