Big Data or Expert Annotation - What's Best for Natural Language Processing?

In 2006, Google released a corpus of more than 1 trillion words, making it the world’s largest. The corpus, pulled from Web sites and users’ queries, is used for applications including statistical machine translation, speech recognition, spelling correction, entity detection, and information extraction. Three years later, Google researchers – including Peter Norvig, a former ICSI board member and now the director of research at Google – published a paper titled “The Unreasonable Effectiveness of Data.” They wrote that natural language processing tasks could be accomplished more efficiently and less expensively through statistical means than by methods that rely on human experts to annotate text.

While Google has obviously been successful in NLP, Collin Baker, project manager of FrameNet, believes there are limits to what statistical methods can accomplish. FrameNet takes a symbolic approach: to draw meaning from text, it uses a more complicated model that relies on expert annotation, and less data than the statistical methods used by Google.

“We believe there are subtleties of meaning you can’t get at in a purely statistical manner,” he said.

Corpuses

In the late 1960s, Brown University released a corpus of 1 million words, the largest at the time. The Brown Corpus included text from 15 different genres, such as fiction and editorial reporting. “It was the first time you had numbers behind the patterns,” Collin said. And the variety of sources made it balanced.

And the corpuses keep getting larger. The British National Corpus now contains about 100 million words and is the largest balanced corpus in use by the NLP community. But with Google’s latest release, Collin said, “Any attempt at balance has been given up. That has its problems.”

The Google researchers admit that “in some ways this corpus is a step backwards from the Brown Corpus: it’s taken from unfiltered Web pages and thus contains incomplete sentences, spelling errors, grammatical errors, and all sorts of other errors.” But, they write in their paper, its size makes up for these problems because it’s able to represent “even very rare aspects of human behavior.”

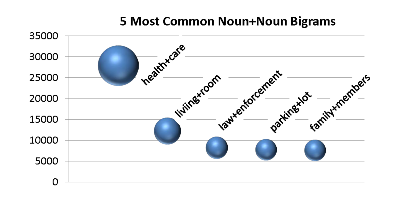

Most common two-noun sequences in the Corpus of Contemporary American English, the largest balanced corpus of American English.

n-Grams

Statistical models are based on n-grams, which are sequences of n words. By collecting data about how often different sequences appear, n-gram models can predict the most likely word to occur next in a phrase. For example, when you see “New York,” the word “city” is more likely to follow than the word “banana.”
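The "New York" example can be sketched in a few lines of Python. This is a minimal illustration with an invented toy corpus and hypothetical function names (`train_ngrams`, `predict_next`), not a description of how Google's systems are built. It counts which word follows each two-word context, then picks the most frequent follower.

```python
from collections import Counter, defaultdict


def train_ngrams(sentences, n=3):
    """Count which word follows each (n-1)-word context."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(len(words) - n + 1):
            context = tuple(words[i:i + n - 1])
            next_word = words[i + n - 1]
            following[context][next_word] += 1
    return following


def predict_next(following, context):
    """Return the most frequent follower of `context`, or None if unseen."""
    counts = following.get(tuple(w.lower() for w in context))
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy corpus, invented for illustration only.
corpus = [
    "she moved to new york city last year",
    "new york city is crowded",
    "new york banana stands are rare",
]

model = train_ngrams(corpus, n=3)
print(predict_next(model, ["New", "York"]))  # city
```

In this toy corpus "city" follows "new york" twice and "banana" once, so "city" is predicted. A real model would be trained on vastly more text and would need a strategy for contexts it has never seen.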

“Google has been extremely successful at using n-grams to answer queries,” Collin said. “When you use a lot of data, you do find unexpected combinations.”

Google also applies statistical modeling to machine translation and document summarization and is beginning to use large data sets for its Voice Search and for YouTube transcripts. Last year, Google researchers trained a speech recognition system using a dataset of 230 billion words. The language model, which calculates the probability that the next word in a sentence will be a certain word based on the frequency of word combinations, had a vocabulary of one million words.
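One common way to turn word-combination frequencies into a next-word probability is the maximum-likelihood estimate below. It is shown as a textbook illustration. The article does not say which estimator or smoothing method the Google system used. Here $C(\cdot)$ is the number of times a word sequence appears in the training data, and $n$ is the n-gram length.

$$
P(w_i \mid w_{i-n+1}, \dots, w_{i-1}) \approx \frac{C(w_{i-n+1} \dots w_{i-1}\, w_i)}{C(w_{i-n+1} \dots w_{i-1})}
$$

For example, if "new york" appears 1,000 times in a corpus and "new york city" appears 400 times, the estimated probability that "city" follows "new york" is 400/1,000 = 0.4. These counts are made up for the arithmetic.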

The Symbolic Approach

FrameNet relies heavily on human annotation of text. It’s based on frame semantics work done by Professor Charles Fillmore, the director of the project. Linguists annotate text by hand in order to build a database of language, usable by both humans and machines, that shows words in each of their meanings and that describes the relationships between words. There are now about 170,000 annotated sentences.

The database groups words according to the semantic frames they participate in. Semantic frames are schematic representations of situation types, such as eating or removing. The database also describes the patterns in which words combine with other words and phrases, according to how frame elements are expressed. Loosely defined, frame elements are the things that are worth talking about within the frame activated by a word. For example, verbs of buying and selling involve a buyer, a seller, some goods, and some money, either explicitly in a sentence or implicitly in a situation, and verbs of revenge involve a prior event, an avenger, an offender, an injured party, and a punishment.
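To make the idea of frames and frame elements concrete, here is a small Python sketch. The class names, field names, and example sentence are hypothetical and chosen for illustration. This is not FrameNet's actual schema or API. It uses only the frame elements named above and shows how an annotation can leave some elements implicit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """A situation type and the frame elements it involves."""
    name: str
    elements: tuple


@dataclass
class AnnotatedSentence:
    """A sentence whose spans a human annotator has mapped to frame elements."""
    text: str
    frame: Frame
    roles: dict  # frame element name -> text span filling that role

    def __post_init__(self):
        # Reject labels that are not frame elements of this frame.
        unknown = [r for r in self.roles if r not in self.frame.elements]
        if unknown:
            raise ValueError(f"not elements of {self.frame.name}: {unknown}")

    def implicit_elements(self):
        """Frame elements the sentence leaves unstated."""
        return [e for e in self.frame.elements if e not in self.roles]


# Frame elements taken from the buying/selling and revenge examples in the text.
COMMERCE = Frame("buying and selling", ("buyer", "seller", "goods", "money"))
REVENGE = Frame(
    "revenge",
    ("prior event", "avenger", "offender", "injured party", "punishment"),
)

# Invented example sentence with a hand-written annotation.
sentence = AnnotatedSentence(
    text="Kim bought a bike from Lee",
    frame=COMMERCE,
    roles={"buyer": "Kim", "goods": "a bike", "seller": "Lee"},
)
print(sentence.implicit_elements())  # ['money']
```

The sentence never mentions money, yet the frame records that money is part of the situation. This is the kind of implicit relationship the annotation is meant to capture.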

Collin said this is one aspect of language that statistical modeling can’t really deal with: the complexity of the relationships between the entities involved in a particular event.

Another complexity of language arises from negative statements – for example, “The student left without asking the teacher.” The use of the word “without” suggests that something is wrong, out of the ordinary, or missing. This relationship is difficult to understand through purely statistical means, but easy for a human annotator. The relationship between related texts is also difficult for n-gram models to parse – for example, recognizing that two news reports are about the same event.

And of course FrameNet has its own drawbacks. The hand-annotation of frames is expensive and slow. The database has about 180,000 examples of frames now, but, Collin said, “From the point of view of machine learning, that’s not very much.”

Bridging the Divide

Despite his criticisms of statistical approaches, Collin is optimistic about collaboration among proponents of the two approaches to NLP. “From the symbolic point of view, we’re eager to make the data we produce useful to those who are using statistical approaches.”

And despite the divide in the NLP community between those who prefer simple models with lots of data and those who prefer complex models that rely on human annotation and less data, Collin sees the two approaches as complementary. “They feed into each other to a fair extent,” he said. “People are starting to realize that you need both.”

Read more:

FrameNet Project Web site. https://framenet.icsi.berkeley.edu/fndrupal/

"The Unreasonable Effectiveness of Data." Alon Halevy, Peter Norvig, and Fernando Pereira. IEEE Intelligent Systems, Vol. 24, No. 2, pp. 8-12, March-April 2009, doi:10.1109/MIS.2009.36. http://www.computer.org/csdl/mags/ex/2009/02/mex2009020008-abs.html